Evaluating the performance of large language model (LLM) inference systems using conventional metrics presents significant challenges. Metrics such as Time To First Token (TTFT) and Time Between Tokens (TBT) do not capture the complete user experience during real-time interactions. This gap is critical in applications like chat and translation, where responsiveness directly affects user satisfaction. A more nuanced evaluation framework is needed, one that fully captures the intricacies of LLM inference, to ensure optimal deployment and performance in real-world scenarios.

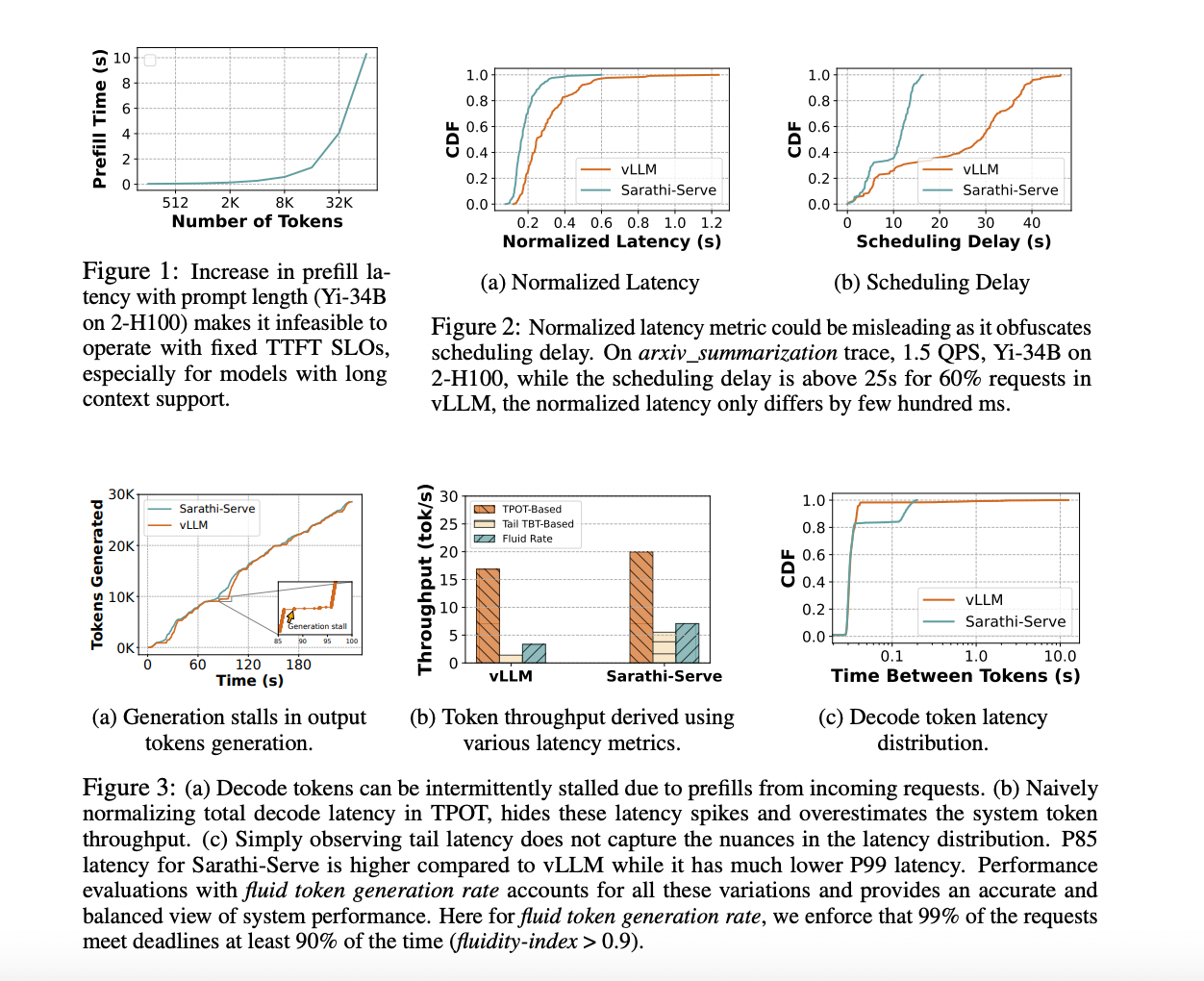

Current methods for evaluating LLM inference performance include TTFT, TBT, normalized latency, and Time Per Output Token (TPOT). These metrics assess various aspects of latency and throughput but fall short of providing a comprehensive view of the user experience. For example, TTFT and TBT focus on individual token latencies without considering end-to-end throughput, while normalized metrics obscure issues like inter-token jitter and scheduling delays. These limitations hinder their effectiveness in real-time applications, where maintaining a smooth and consistent token generation rate is crucial.
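To make the limitation concrete, here is a minimal Python sketch that computes these conventional metrics from per-token timestamps. The `RequestTrace` type, the function names, and the two example traces are hypothetical and not taken from Metron. Exact definitions of TPOT and normalized latency vary between papers, so the formulas below are one common reading rather than an authoritative one.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RequestTrace:
    """Hypothetical record of one streamed request (times in seconds)."""
    arrival: float            # when the request was submitted
    token_times: List[float]  # when each output token was emitted


def ttft(trace: RequestTrace) -> float:
    """Time To First Token: delay before the first output token."""
    return trace.token_times[0] - trace.arrival


def tbt(trace: RequestTrace) -> List[float]:
    """Time Between Tokens: gap between each pair of consecutive tokens."""
    return [b - a for a, b in zip(trace.token_times, trace.token_times[1:])]


def tpot(trace: RequestTrace) -> float:
    """Time Per Output Token, assumed here to be the mean inter-token gap."""
    gaps = tbt(trace)
    return sum(gaps) / len(gaps)


def normalized_latency(trace: RequestTrace) -> float:
    """End-to-end latency divided by the number of output tokens."""
    return (trace.token_times[-1] - trace.arrival) / len(trace.token_times)


# Two made-up traces: one steady, one that stalls before the last token.
smooth = RequestTrace(arrival=0.0, token_times=[0.5, 1.0, 1.5, 2.0, 2.5])
stalled = RequestTrace(arrival=0.0, token_times=[0.5, 0.75, 1.0, 1.25, 2.5])

for name, trace in [("smooth", smooth), ("stalled", stalled)]:
    print(name, ttft(trace), tpot(trace), normalized_latency(trace), max(tbt(trace)))
```

Both traces report the same TTFT, TPOT, and normalized latency, yet the second contains a 1.25-second stall. This is the kind of inter-token jitter that averaged metrics hide.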

A team of researchers from Georgia Institute of Technology, Microsoft Research India, and Intel AI Lab proposes Metron, a comprehensive performance evaluation framework. Metron introduces novel metrics such as the fluidity-index and fluid token generation rate, which capture the nuances of real-time, streaming LLM interactions. These metrics consider the temporal aspects of token generation, giving a more accurate reflection of user-facing performance. By setting token-level deadlines and measuring the fraction of deadlines met, the fluidity-index provides a precise definition of user experience constraints. This approach is a significant contribution because it offers a more accurate and user-centric evaluation method.

Metron’s fluidity-index metric sets deadlines for token generation based on desired TTFT and TBT values, adjusting them based on prompt length and observed system performance. This method accounts for scheduling delays and variable token generation rates, so the score reflects whether output actually stays smooth. The framework evaluates both open-source and proprietary LLM inference systems, applying the fluidity-index to measure the percentage of deadlines met and dynamically adjusting deadlines based on real-time performance. This method offers a comprehensive view of the system’s capacity to handle user requests without compromising responsiveness.
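The deadline mechanism can be illustrated with a short Python sketch. This is a simplified reading of the idea, not Metron’s implementation. The function name `fluidity_index` and its parameters are hypothetical, and the rule of rebasing deadlines on the actual emission time after a miss is an assumption made for illustration. The sketch also takes the target TTFT as a fixed input instead of deriving it from prompt length.

```python
from typing import List


def fluidity_index(
    arrival: float,
    token_times: List[float],
    target_ttft: float,
    target_tbt: float,
) -> float:
    """Fraction of token-level deadlines met for one request.

    Assumed rules (illustrative, not confirmed against Metron's code):
      * the first token is due at arrival + target_ttft;
      * after a token meets its deadline, the next deadline is the previous
        deadline + target_tbt, so tokens that arrive early bank slack;
      * after a miss, the next deadline is rebased to the late token's
        actual emission time + target_tbt.
    """
    if not token_times:
        raise ValueError("token_times must contain at least one token")

    deadline = arrival + target_ttft
    met = 0
    for emitted_at in token_times:
        if emitted_at <= deadline:
            met += 1
            deadline += target_tbt               # carry slack forward
        else:
            deadline = emitted_at + target_tbt   # reset after a miss
    return met / len(token_times)


# Made-up trace with a long stall before the third token.
score = fluidity_index(
    arrival=0.0,
    token_times=[0.5, 1.0, 3.0, 3.25, 3.5],
    target_ttft=1.0,
    target_tbt=0.5,
)
print(score)  # 0.8: four of the five deadlines are met
```

Under these assumptions a short pause can be absorbed by slack from earlier tokens, while a stall that outlasts the slack counts as exactly one miss and does not penalize every token after it.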

Metron provides a more accurate evaluation of LLM inference systems than conventional metrics. The fluidity-index and fluid token generation rate reveal significant differences in user experience that are not captured by TTFT or TBT alone. For example, the evaluation of systems like vLLM and Sarathi-Serve showed that Sarathi-Serve achieved fewer deadline misses and higher fluidity. The findings show that Sarathi-Serve maintained a fluidity-index > 0.9 for 99% of requests, achieving a throughput of 600 tokens per second, while vLLM showed a 3x worse tail TBT due to generation stalls. This demonstrates Metron’s effectiveness in revealing performance differences and supporting better user experiences in real-world applications.
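A criterion of the form "fluidity-index > 0.9 for 99% of requests" can be checked over a batch of per-request scores as sketched below. The helper names and the sample scores are hypothetical and are not data from the paper.

```python
from typing import Sequence


def fraction_above(scores: Sequence[float], threshold: float = 0.9) -> float:
    """Fraction of requests whose fluidity-index strictly exceeds the threshold."""
    if not scores:
        raise ValueError("scores must not be empty")
    return sum(s > threshold for s in scores) / len(scores)


def meets_target(
    scores: Sequence[float],
    threshold: float = 0.9,
    required_fraction: float = 0.99,
) -> bool:
    """True if at least `required_fraction` of requests exceed the threshold."""
    return fraction_above(scores, threshold) >= required_fraction


# Made-up per-request scores: 99 fluid requests and one poor one.
sample_scores = [0.95] * 99 + [0.5]
print(fraction_above(sample_scores), meets_target(sample_scores))
```

Sweeping the offered load and recording the highest rate at which a check like `meets_target` still passes is one way such a criterion could be turned into a throughput figure. That procedure is an assumption here, not a description of Metron’s tooling.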

In conclusion, Metron introduces a novel evaluation framework, including the fluidity-index and fluid token generation rate metrics, to better assess LLM inference performance. This approach overcomes the limitations of conventional metrics by providing a user-centric evaluation that captures the intricacies of real-time token generation. The findings demonstrate Metron’s effectiveness in revealing performance differences and its potential impact on improving LLM serving frameworks, leading to better user experiences in real-world applications.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.

Aswin AK is a consulting intern at MarkTechPost. He is pursuing his Dual Degree at the Indian Institute of Technology, Kharagpur. He is passionate about data science and machine learning, bringing a strong academic background and hands-on experience in solving real-life cross-domain challenges.